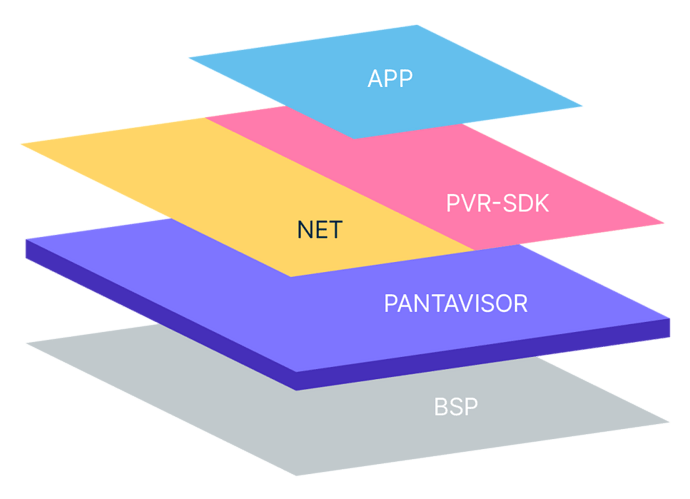

First, let’s do a quick refresh on Pantavisor Linux architecture, the idea behind Pantavisor Linux is to build the full OS using containers as the building blocks. This will allow the creation of a reproducible state for the device system. In that way the system will look something like this:

In this image, we can see that network management is handled by a container. Next to it is pvr-sdk, a container used during development to manage the device on the local network, SSH into it, and run a few other development tasks. On the upper layer sits the main app, which implements the device's functionality.

How Pantavisor manages drivers

Pantavisor offers a mechanism for BSPs to define which (abstract) drivers they support. To do so, the BSP specifies a list of abstract drivers and, if needed, maps each of them to a list of kernel modules that Pantavisor can load on demand. Driver loading can be parameterized, and those parameters can be sourced dynamically from user-meta.

Containers, on the other hand, express which drivers they need by referencing those driver names, and Pantavisor mediates loading the drivers as needed.

By offering this alias mechanism, product and platform builders gain a level of abstraction that lets multiple BSPs satisfy the same container requirements, each by loading its own appropriate modules.

For example, the rockpi4 uses either the brcmfmac or the rtl8xxxu driver for wifi, depending on the board version, while the rpi4 uses brcmfmac. Using an abstract driver name, a container that manages wifi through the standard Linux kernel framework can require that the BSP provide "wifi" driver support, and each BSP can declare that it offers "wifi". This way, Pantavisor handles the module loading and can also prevent a container from being deployed when no wifi support is available.
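To illustrate the alias idea, here is a small Python sketch. It models each BSP as a table that maps abstract driver names to kernel modules. The BSP keys (rpi4, rockpi4-rtl, no-wifi-bsp) and their module lists are illustrative assumptions, not real BSP definitions. The helpers modules_for and can_deploy are hypothetical and not part of Pantavisor. The point is that the container only ever names "wifi", while each BSP decides which modules back that name.

```python
from __future__ import annotations

from typing import Optional

# Hypothetical BSP alias tables: each BSP maps abstract driver names to the
# kernel modules it would load. BSP keys and module lists are illustrative.
BSP_ALIASES: dict[str, dict[str, list[str]]] = {
    "rpi4": {"wifi": ["brcmfmac"]},
    "rockpi4-rtl": {"wifi": ["rtl8xxxu"]},  # assumed board revision with a Realtek chip
    "no-wifi-bsp": {"bluetooth": ["hci_uart"]},
}


def modules_for(bsp: str, alias: str) -> Optional[list[str]]:
    """Return the modules a BSP loads for an abstract driver, or None if it lacks it."""
    return BSP_ALIASES.get(bsp, {}).get(alias)


def can_deploy(bsp: str, needed: list[str]) -> bool:
    """A container is deployable on a BSP only if every alias it needs is offered."""
    return all(modules_for(bsp, alias) is not None for alias in needed)


if __name__ == "__main__":
    for bsp in BSP_ALIASES:
        print(bsp, modules_for(bsp, "wifi"), can_deploy(bsp, ["wifi"]))
```

Running it prints one line per BSP. The two wifi-capable BSPs resolve "wifi" to different modules. The no-wifi-bsp entry returns None, which is the case where Pantavisor would keep a container that needs wifi from being deployed.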

How this works inside the Pantavisor configuration

For this to work, the BSP configuration declares a drivers.json that defines all the drivers containers can request, and each container declares the drivers it needs Pantavisor to load for it.

First, let's look at the keys inside drivers.json:

| Key | Value | Description |
|---|---|---|
| #spec | driver-aliases@1 | version of the drivers JSON format |
| all | managed driver definitions (driver name to module list) | default drivers available in the kernel |
| dtb:NAME | managed driver definitions (driver name to module list) | drivers available per DTB (optional) |
| ovl:NAME | managed driver definitions (driver name to module list) | drivers exposed via overlay (experimental) |
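To make the table concrete, here is a hypothetical Python checker for the top-level layout of a drivers.json. It is not a Pantavisor tool. Its rules come only from the table above: #spec must be driver-aliases@1, any other key must be all or start with dtb: or ovl:, and each section maps driver names to lists of module strings. The function name validate_drivers_json is an illustrative assumption.

```python
from __future__ import annotations

import json

# Section keys other than "all" must use one of these prefixes (from the table above).
SECTION_PREFIXES = ("dtb:", "ovl:")


def validate_drivers_json(text: str) -> list[str]:
    """Return a list of problems found in a drivers.json document (empty if none)."""
    doc = json.loads(text)
    problems: list[str] = []
    if doc.get("#spec") != "driver-aliases@1":
        problems.append("#spec must be 'driver-aliases@1'")
    for key, section in doc.items():
        if key == "#spec":
            continue
        if key != "all" and not key.startswith(SECTION_PREFIXES):
            problems.append(f"unknown section key: {key}")
            continue
        if not isinstance(section, dict):
            problems.append(f"{key} must map driver names to module lists")
            continue
        for name, modules in section.items():
            # Each driver name must map to a list of module entry strings.
            if not isinstance(modules, list) or not all(isinstance(m, str) for m in modules):
                problems.append(f"{key}/{name} must be a list of module strings")
    return problems


if __name__ == "__main__":
    sample = '{"#spec": "driver-aliases@1", "all": {"wifi": ["brcmfmac"]}, "dtb:all": {}}'
    print(validate_drivers_json(sample))  # prints [] because the sample is well formed
```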

Here is an example drivers.json:

{
  "#spec": "driver-aliases@1",
  "all": {
    "bluetooth": [
      "hci_uart",
      "btintel"
    ],
    "wifi": [
      "iwlwifi ${user-meta:drivers.iwlwifi.opts}"
    ]
  },
  "dtb:broadcom/bcm2711-rpi-4-b.dtb": {
    "wifi": [
      "cfg80211 ${user-meta:drivers.cfg80211.opts}",
      "brcmfmac ${user-meta:drivers.brcmfmac.opts}"
    ]
  }
}

In this example, the BSP declares two drivers:

- bluetooth: uses two kernel modules, hci_uart and btintel, which are loaded when a container lists this driver as a requirement.
- wifi: there is a default wifi driver (iwlwifi), but if the board loads the broadcom/bcm2711-rpi-4-b.dtb device tree, the DTB-specific definition applies and the cfg80211 and brcmfmac modules are used.
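The wifi entry raises the question of how a dtb: section combines with all. The post does not spell out the exact rule, so the following Python sketch assumes one. It assumes an alias defined under the booted board's dtb:NAME section takes precedence over the same alias under all, and that any other alias falls back to all. The function name resolve is hypothetical and not part of Pantavisor.

```python
from __future__ import annotations

from typing import Optional

# The example drivers.json from above, as a Python dict.
DRIVERS = {
    "#spec": "driver-aliases@1",
    "all": {
        "bluetooth": ["hci_uart", "btintel"],
        "wifi": ["iwlwifi ${user-meta:drivers.iwlwifi.opts}"],
    },
    "dtb:broadcom/bcm2711-rpi-4-b.dtb": {
        "wifi": [
            "cfg80211 ${user-meta:drivers.cfg80211.opts}",
            "brcmfmac ${user-meta:drivers.brcmfmac.opts}",
        ],
    },
}


def resolve(drivers: dict, alias: str, dtb: Optional[str]) -> Optional[list[str]]:
    """Return the module entries for an alias on a board booted with `dtb`.

    Assumption: an alias defined in the matching dtb:NAME section replaces
    the same alias from "all"; otherwise the "all" definition is used.
    Returns None when the BSP does not offer the alias at all.
    """
    if dtb is not None:
        board_section = drivers.get(f"dtb:{dtb}", {})
        if alias in board_section:
            return board_section[alias]
    return drivers.get("all", {}).get(alias)


if __name__ == "__main__":
    print(resolve(DRIVERS, "wifi", "broadcom/bcm2711-rpi-4-b.dtb"))
    print(resolve(DRIVERS, "wifi", None))
    print(resolve(DRIVERS, "bluetooth", "broadcom/bcm2711-rpi-4-b.dtb"))
```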

Let's take a closer look at the wifi driver. After each module name there is a template variable such as ${user-meta:drivers.iwlwifi.opts}. It defines optional parameters to pass when the module is loaded. The prefix selects the source (user-meta or device-meta), and the part after the colon is the key from which the extra parameters are read.
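The following Python sketch shows one way such a template could be expanded. It is a hypothetical model, not Pantavisor's implementation. It assumes the metadata is available as flat key/value dictionaries and that a missing key expands to an empty string, so the module loads without extra parameters. The function name expand and the sample value power_save=0 are illustrative.

```python
from __future__ import annotations

import re

# Matches ${user-meta:KEY} or ${device-meta:KEY}; KEY runs up to the closing brace.
TEMPLATE = re.compile(r"\$\{(user-meta|device-meta):([^}]+)\}")


def expand(entry: str, user_meta: dict[str, str], device_meta: dict[str, str]) -> str:
    """Expand metadata templates in one module entry from drivers.json.

    Assumption: a key missing from the metadata expands to an empty string,
    so the module is loaded without extra parameters.
    """
    sources = {"user-meta": user_meta, "device-meta": device_meta}

    def substitute(match: re.Match) -> str:
        source, key = match.group(1), match.group(2)
        return sources[source].get(key, "")

    # strip() drops the trailing space left behind when a template expands to "".
    return TEMPLATE.sub(substitute, entry).strip()


if __name__ == "__main__":
    user_meta = {"drivers.iwlwifi.opts": "power_save=0"}  # illustrative value
    print(expand("iwlwifi ${user-meta:drivers.iwlwifi.opts}", user_meta, {}))
    print(expand("iwlwifi ${user-meta:drivers.iwlwifi.opts}", {}, {}))
```

With the metadata key set, the first call yields "iwlwifi power_save=0". Without it, the entry collapses to plain "iwlwifi".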

Now let's look at how a container asks Pantavisor to load a driver, through the container's run.json:

{
  "#spec": "service-manifest-run@1",
  "config": "lxc.container.conf",
  "group": "root",
  "name": "awconnect",
  "root-volume": "root.squashfs",
  "storage": {
    "docker--etc-NetworkManager-system-connections": {
      "persistence": "permanent"
    },
    "lxc-overlay": {
      "persistence": "boot"
    }
  },
  "type": "lxc",
  "volumes": [],
  "drivers": {
    "manual": [],
    "optional": [
      "wifi",
      "usbnet",
      "bluetooth"
    ],
    "required": []
  }
}

In this example, the important key is drivers, which contains three keys:

- manual: list of drivers that are loaded manually through a pvcontrol API call. This is an experimental feature.

- optional: list of drivers that Pantavisor loads if they are available in the BSP. Because they are optional, the container does not fail during initialization if the BSP does not provide them.

- required: list of drivers that Pantavisor must load for the container. Because they are required, the container fails during initialization if the BSP does not provide them.
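The three lists above can be summarized with a small Python model. This is a hypothetical sketch, not Pantavisor's code. Given a run.json drivers object and the set of aliases a BSP offers, it sorts each alias into one of four groups: loaded at startup, optional and skipped, manual and waiting for an explicit request, or required and missing. A missing required alias blocks the container from starting. The names DriverPlan and plan, and the BSP alias set used below, are illustrative. The order in which aliases are loaded is arbitrary here. The sketch does not check whether the BSP offers the manual drivers.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class DriverPlan:
    load: list[str] = field(default_factory=list)              # aliases loaded at startup
    skipped_optional: list[str] = field(default_factory=list)  # optional, not in the BSP
    manual: list[str] = field(default_factory=list)            # waiting for an explicit request
    missing_required: list[str] = field(default_factory=list)  # required, not in the BSP

    @property
    def can_start(self) -> bool:
        """The container may start only if no required driver is missing."""
        return not self.missing_required


def plan(drivers: dict, bsp_aliases: set[str]) -> DriverPlan:
    """Classify a run.json "drivers" object against the aliases a BSP offers."""
    result = DriverPlan(manual=list(drivers.get("manual", [])))
    for alias in drivers.get("required", []):
        target = result.load if alias in bsp_aliases else result.missing_required
        target.append(alias)
    for alias in drivers.get("optional", []):
        target = result.load if alias in bsp_aliases else result.skipped_optional
        target.append(alias)
    return result


if __name__ == "__main__":
    awconnect = {"manual": [], "optional": ["wifi", "usbnet", "bluetooth"], "required": []}
    print(plan(awconnect, {"wifi", "bluetooth"}))
```

For the awconnect example on a BSP that offers only wifi and bluetooth, usbnet is skipped and the container still starts.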

Another way to create and maintain run.json is with the PVR CLI. In that case, we edit the src.json in the container's definition and add the following arguments inside its args key:

- PV_DRIVERS_OPTIONAL: an array of optional drivers, equivalent to the optional key in run.json.
- PV_DRIVERS_MANUAL: an array of manually loaded drivers, equivalent to the manual key in run.json.
- PV_DRIVERS_REQUIRED: an array of required drivers, equivalent to the required key in run.json.
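As a rough model of that correspondence, the Python sketch below builds a run.json-style drivers object from a src.json document. It is hypothetical and is not how pvr is implemented. It assumes that any argument left unset becomes an empty list, which matches the tailscale run.json generated later in this post. The names ARG_TO_KEY and drivers_from_src are illustrative.

```python
from __future__ import annotations

import json

# Hypothetical mapping from src.json args to the keys of run.json's "drivers" object.
ARG_TO_KEY = {
    "PV_DRIVERS_MANUAL": "manual",
    "PV_DRIVERS_OPTIONAL": "optional",
    "PV_DRIVERS_REQUIRED": "required",
}


def drivers_from_src(src: dict) -> dict[str, list[str]]:
    """Build a run.json-style "drivers" object from a src.json document.

    Assumption: an argument that is not set becomes an empty list.
    """
    args = src.get("args", {})
    return {key: list(args.get(arg, [])) for arg, key in ARG_TO_KEY.items()}


if __name__ == "__main__":
    src = {"#spec": "service-manifest-src@1", "args": {"PV_DRIVERS_REQUIRED": ["wireguard"]}}
    print(json.dumps(drivers_from_src(src)))
```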

Example

Using our Raspberry Pi 64 image, we get a BSP with the following drivers.json:

{
  "#spec": "driver-aliases@1",
  "all": {
    "bluetooth": [
      "btbcm",
      "hci_uart"
    ],
    "wifi": [
      "brcmutil",
      "brcmfmac"
    ],
    "wireguard": [
      "wireguard"
    ]
  },
  "dtb:all": {}
}

This BSP exposes the wifi, Bluetooth, and wireguard drivers. Now let's install the tailscale container using PVR:

pvr app add registry.gitlab.com/highercomve/ph-tailscale:master tailscale

After this, we modify the tailscale/src.json file and add the PV_DRIVERS_REQUIRED argument so that Pantavisor loads the wireguard module.

Result:

{
  "#spec": "service-manifest-src@1",
  "args": {
    "PV_DRIVERS_REQUIRED": [
      "wireguard"
    ]
  },
  "docker_config": {
    "ArgsEscaped": true,
    "Cmd": [
      "/sbin/init"
    ],
    "Env": [
      "PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin"
    ],
    "Volumes": {
      "/var/lib/tailscale": {}
    }
  },
  "docker_digest": "registry.gitlab.com/highercomve/ph-tailscale@sha256:c9e5b521206bb1d2c9d5dbd5bf2fe3f561013acb3d131949b8955ccfbdf04d40",
  "docker_name": "registry.gitlab.com/highercomve/ph-tailscale",
  "docker_platform": "linux/arm",
  "docker_source": "remote,local",
  "docker_tag": "master",
  "template": "builtin-lxc-docker"
}

Here the wireguard driver is declared as required for tailscale to work: if the driver is not available, tailscale fails at initialization.

After adding the driver arguments to src.json, we install the container again to regenerate run.json:

pvr app install tailscale

As a result, the new run.json is:

{
  "#spec": "service-manifest-run@1",
  "name": "tailscale",
  "config": "lxc.container.conf",
  "drivers": {
    "manual": [],
    "required": ["wireguard"],
    "optional": []
  },
  "group": "app",
  "storage": {
    "docker--var-lib-tailscale": {
      "persistence": "permanent"
    },
    "lxc-overlay": {
      "persistence": "boot"
    }
  },
  "type": "lxc",
  "root-volume": "root.squashfs",
  "volumes": []
}

Conclusions

The idea behind Pantavisor's driver declaration language is to abstract containers' driver requirements from the drivers each BSP makes available. This removes the need to load the required kernel modules manually inside the container's logic. It also allows BSP providers to support more boards with the same BSP, using different drivers depending on the board while exposing them under a single driver name across the different hardware.